Roundup of Google updates from January 2021

January 2021 served as the aftermath of the turbulent events that took place across 2020. Let's take a look at Google's updates and responses.

The month followed the full rollout of December’s Google Broad Core Algorithm Update. Whilst site owners are still trying to figure out the full extent of the algorithm changes, Google’s experts and the search community are back to business as usual.

The Google Experts at our SEO agency in London continued their efforts to decipher the algorithm’s signals and prepare for the upcoming Core Web Vitals, which will give user experience a whole new weight in search.

Google’s spokespersons and search pundits shared insights that once again debunked some commonly held beliefs in SEO. We learned, for example, that embedded videos, such as YouTube videos, carry the same SEO value as uploaded content.

We were also thrilled to find out that high-traffic pages are stored in RAM, whereas the majority of indexed pages sit on hard disk drives (HDD). This gives a sense of the gigantic scale of Google’s storage capabilities, as well as its limitations.

New Price Drop rich results will show in search engine results pages (SERPs) for product-related queries, as Google keeps on helping eCommerce sites. Meanwhile, Googlebot has started crawling sites over the HTTP/2 protocol.

Last but not least, Google made clear that, as of May 2021, sites will only reap the Core Web Vitals ranking benefits if all three benchmarks are met.

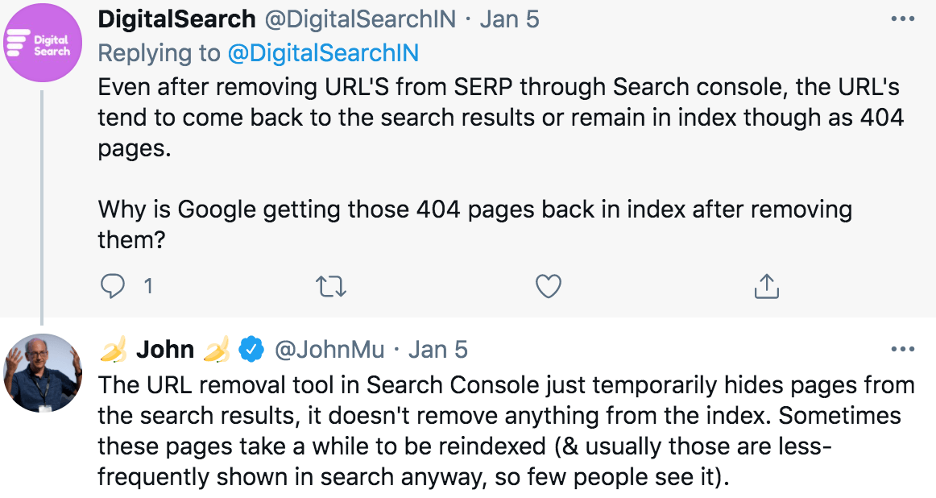

5th Jan: Google on what the Search Console URL removal tool does

Google’s usual suspect John Mueller answers a Twitter question about what the Search Console URL Removals Tool does.

The question concerned a hacked site. Even though its spammy URLs had been removed, Google kept on indexing them and returning them in search results.

It’s hugely frustrating for site owners to have their site hacked and then, after removing the spam URLs, to see Google keep showing them in search results.

The disgruntled question was based on the common perception that the URL Removals Tool removes a URL from both the SERPs and the index once and for all. This doesn’t seem to be quite the case, though.

The URL removal tool in Search Console just temporarily hides pages from the search results, it doesn’t remove anything from the index.

John Mueller, Google

Sometimes these pages take a while to be reindexed (and usually those are less-frequently shown in search anyway, so few people see it).

In fact, Google’s Webmaster Support page for the URL Removals Tool explicitly mentions numerous times that the tool “temporarily” blocks URLs on a site.

This tweet clarified things, as many in the search community were under the false impression that the Search Console URL removal tool removes pages permanently.

8th Jan: Google’s John Mueller on whether using disavow tells that a site is shady

In that day’s Google Office Hours Hangout, John Mueller answered whether disavowing links could send negative signals to Google about the site doing it, or raise flags about its legitimacy.

So, how does link disavow work?

Google Search Console has a link disavow tool, which publishers use to tell Google to ignore certain links pointing to their websites. The tool was introduced in 2012, after the SEO community asked for a way to deal with sites hit by that year’s Penguin algorithm update.

The disavow tool was originally meant for cleaning up the backlink profile of a site that had been part of link schemes, such as buying links pointing to the site, a tactic that goes against Google’s guidelines.
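The disavow tool works from a plain-text file that you upload. According to Google’s documentation, each line contains either a single URL or a whole domain prefixed with `domain:`, and lines starting with `#` are treated as comments. Below is a minimal Python sketch that assembles such a file. The helper `build_disavow_file` is a hypothetical name of our own and is not part of any Google tool.

```python
from urllib.parse import urlparse


def build_disavow_file(urls: list[str], domains: list[str], note: str = "") -> str:
    """Return disavow-file text listing whole domains first, then single URLs."""
    lines = []
    if note:
        # Lines starting with '#' are comments and are ignored by Google.
        lines.append(f"# {note}")
    # 'domain:' entries disavow every link from that domain.
    covered = sorted({d.strip().lower() for d in domains})
    lines.extend(f"domain:{d}" for d in covered)
    for url in sorted(set(urls)):
        host = (urlparse(url).hostname or "").lower()
        # Skip URLs whose exact host is already disavowed as a whole domain.
        if host in covered:
            continue
        lines.append(url)
    return "\n".join(lines) + "\n"
```

For example, `build_disavow_file(["http://other.example/x"], ["spam.example"], note="hacked links")` produces a comment line, a `domain:spam.example` line and the single URL. Listing a whole domain keeps the file short when a spammy site links to you from many pages.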

The question, and the whole discussion that early January Friday, was premised on the widely held assumption that filing a disavow is like telling Google your site is suspicious.

Question asked to Mueller:

After the disavow tool is used, does a domain carry any mark that… may hold it back?

After taking some seconds to gather his thoughts, Mueller replied:

No, no, the disavow tool is purely a technical thing, essentially, that tells our systems to ignore these links. It’s not an admission of guilt or any kind of bad thing to use a disavow tool.

John Mueller, Google

It’s not the case that we would say, well, they’re using the disavow tool, therefore they must be buying links. It’s really just a way to say, well… I don’t want these links to be taken into account.

Sometimes that’s for things that you just don’t want Google to take into account for whatever reason. And both of those things are good situations, right? It’s like you recognize there’s a problem and this is a tool that you can use to resolve that. And that’s not a bad thing.

So it’s not the case that there is any kind of a red mark or any kind of a flag that’s passed on just because a website has used the disavow tool.

Mueller’s confident answer should reassure site owners that Google will not penalise them for using the disavow tool to deal with unwanted links.

13th Jan: Google: Googlebot is now crawling over HTTP/2

Google’s Gary Illyes announced via Twitter that Googlebot is now crawling sites over the new HTTP/2 protocol, and that Google sends notifications to let site owners know when their site is being crawled this way.

What is HTTP/2?

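HTTP/2 is the successor to HTTP/1.1, the protocol that servers, bots and browsers use to transfer data. It is more efficient, mainly because it can carry many requests over a single connection and compresses headers, which reduces the load on servers. Browsers only support it over encrypted (HTTPS) connections.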

Lighter server loads, in turn, result in fewer crawling errors and faster sites for users. This benefits the overall user experience, which Google is highly interested in.

For Googlebot to crawl a site over HTTP/2, the site’s server has to support the protocol.
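Over HTTPS, a client and server agree on HTTP/2 during the TLS handshake through ALPN (Application-Layer Protocol Negotiation). The Python sketch below, which uses only the standard library, offers both `h2` and `http/1.1` and reports which one the server picks. It only shows whether your server can speak HTTP/2. Whether Googlebot actually switches to it is Google’s decision. The function names are our own, hypothetical helpers.

```python
import socket
import ssl
from typing import Optional


def describe_protocol(alpn: Optional[str]) -> str:
    """Map an ALPN protocol ID to a readable HTTP version."""
    names = {"h2": "HTTP/2", "http/1.1": "HTTP/1.1"}
    return names.get(alpn or "", "unknown (no ALPN agreement)")


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect over TLS, offer h2 and http/1.1, and report what the server selects."""
    context = ssl.create_default_context()
    # Advertise the protocols this client speaks, most preferred first.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return describe_protocol(tls.selected_alpn_protocol())
```

Calling `negotiated_protocol("www.example.com")` with your own hostname returns `"HTTP/2"` if the server accepts `h2`. The call needs network access to the server.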

Google also made clear that not all sites will be crawled over HTTP/2 or benefit from it:

“In our evaluations, we found little to no benefit for certain sites (for example, those with very low qps) when crawling over h2. Therefore we have decided to switch crawling to h2 only when there’s clear benefit for the site. We’ll continue to evaluate the performance gains and may change our criteria for switching in the future.”

19th Jan: Google uses faster storage for high traffic pages

On that Tuesday’s Search Off the Record podcast, Google’s Gary Illyes revealed that the search index uses a tiered structure, in which high-traffic content is stored on faster and pricier storage.

While discussing language complexities in search index selection, Illyes explained that indexed content is stored on three types of storage:

- RAM (Random Access Memory): fastest and most expensive

- SSD (Solid State Drive): very fast but quite expensive

- HDD (Hard Disk Drive): slowest and least costly

It turns out that Google reserves the quickest storage for documents that are likely to be served in search results more frequently than others.

And then, when we build our index, and we use all those signals that we have. Let’s pick one, say, page rank, then we try to estimate how much we would serve those documents that we indexed.

Gary Illyes, Google

..So for example, for documents that we know that might be surfaced every second, for example, they will end up on something super fast. And the super-fast would be the RAM. As part of our serving index is on RAM.

Then we’ll have another tier, for example, for solid-state drives because they are fast and not as expensive as RAM. But still not– the bulk of the index wouldn’t be on that. The bulk of the index would be on something that’s cheap, accessible, easily replaceable, and doesn’t break the bank. And that would be hard drives or floppy disks.
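To make the tiering idea concrete, here is a toy Python sketch. It places each document on the fastest tier its expected serving rate justifies. Google has not published how it assigns tiers, so the thresholds, the `Document` type and the function names are all hypothetical. The "once per second" cut-off for RAM simply echoes Illyes’s example.

```python
from dataclasses import dataclass

# Hypothetical thresholds: Google has not published its tiering rules.
RAM_MIN_DAILY_SERVES = 86_400  # about once per second, echoing Illyes's example
SSD_MIN_DAILY_SERVES = 1_000


@dataclass
class Document:
    url: str
    expected_daily_serves: float  # an estimate built from signals such as PageRank


def assign_tier(doc: Document) -> str:
    """Pick the fastest storage tier that the expected serving rate justifies."""
    if doc.expected_daily_serves >= RAM_MIN_DAILY_SERVES:
        return "RAM"
    if doc.expected_daily_serves >= SSD_MIN_DAILY_SERVES:
        return "SSD"
    return "HDD"  # the cheap bulk tier that holds most of the index


def build_tiers(docs: list[Document]) -> dict[str, list[str]]:
    """Group document URLs by the tier they would be stored on."""
    tiers: dict[str, list[str]] = {"RAM": [], "SSD": [], "HDD": []}
    for doc in docs:
        tiers[assign_tier(doc)].append(doc.url)
    return tiers
```

The point of the design is cost. Only the small slice of documents that is served constantly justifies the price of RAM, and everything else falls through to cheaper media.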

The insight from this podcast is fascinating, especially from an SEO standpoint. It’s impossible to know which storage tier a site’s pages are stored on. Still, it’s good to know that indexed pages are spread across faster and slower storage tiers according to how often Google expects to serve them.

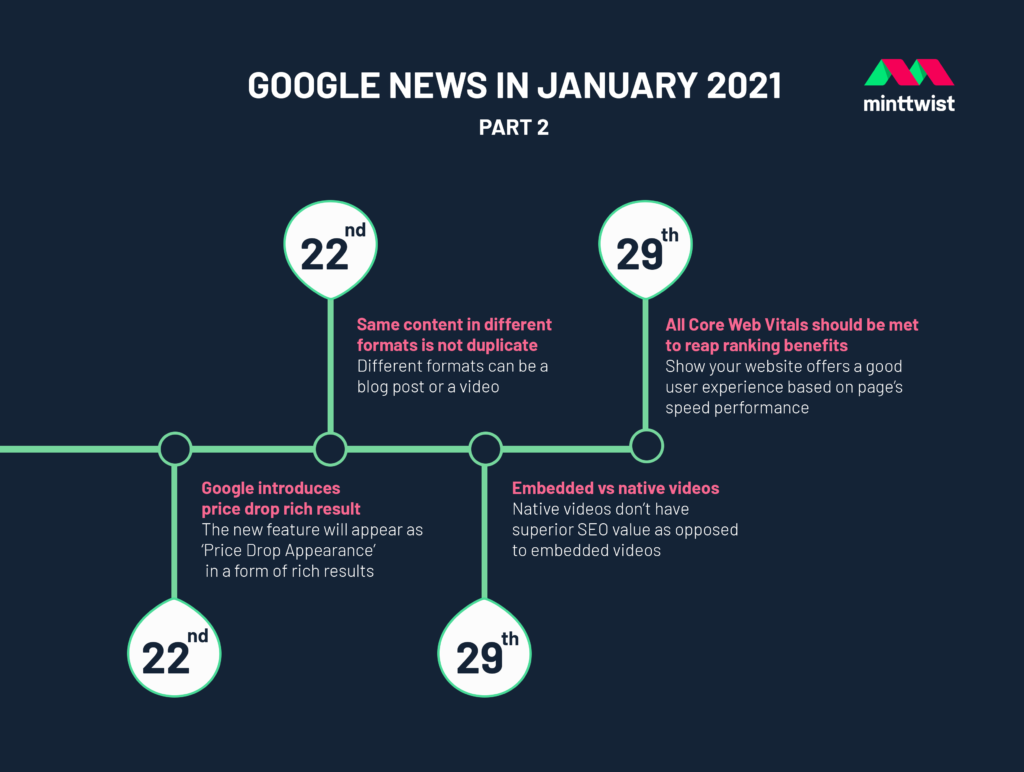

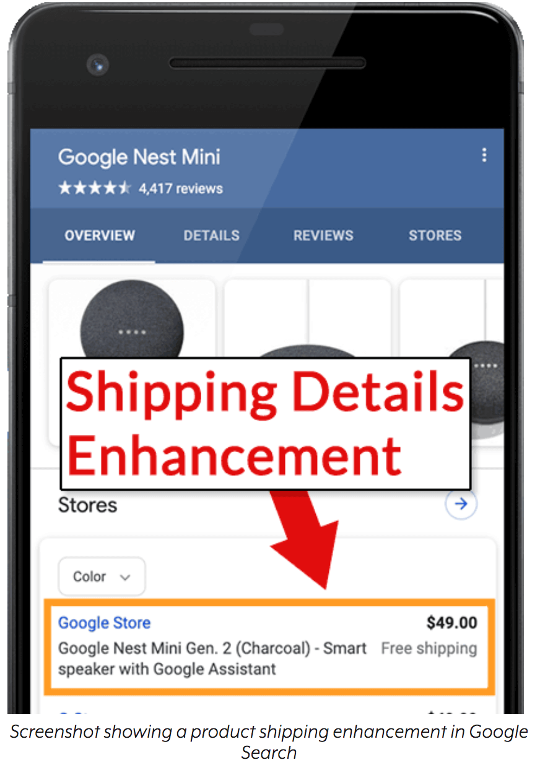

22nd Jan: Google introduces price drop rich result

Google updates the Product Structured Data documentation with a new Price Drop search feature for Product pages.

The new feature will appear in the form of a rich result called the price drop appearance.

So what are rich results?

Rich results are enhanced, highlighted search results that stand out in the search engine results pages (SERPs) and tend to attract more clicks to a site. To be eligible to appear in Google Search as a rich result, a page has to include markup for specific structured data types.

Schema.org structured data, developed by Google and other major search engines, describes a page’s content in detail and helps search engines better understand what the page is about. Google publishes developer pages telling publishers, in this case eCommerce stores, which structured data to use for each type of content.

Product structured data can surface as product images, review stars, shipping information, special offers and more. The new rich result introduced by Google, the price drop appearance, is part of Product structured data.

Google monitors the price given in the Schema.org Offer type within the Product structured data on a product page. When the price falls below its running historical average, Google can show a “price drop” rich result that signals the reduction.
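As an illustration, the following Python sketch generates Product markup with a nested Offer as a JSON-LD script tag. The product name, image URL and the helper `product_jsonld` are placeholders of our own. Google’s Product documentation lists further required and recommended properties, so treat this as a starting point rather than complete markup.

```python
import json


def product_jsonld(name: str, price: float, currency: str, image: str) -> str:
    """Return a JSON-LD <script> tag describing a Product with one Offer."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": image,
        "offers": {
            "@type": "Offer",
            # One specific price, formatted to two decimals (not a price range).
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"
```

For example, `product_jsonld("Running shoe", 59.99, "USD", "https://www.example.com/shoe.jpg")` emits a block you would place in the product page’s HTML.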

Price drop: Help people understand the lowest price for your product. Based on the running historical average of your product’s pricing, Google automatically calculates the price drop. The price drop appearance is available in English in the US, on both desktop and mobile.

Google

To be eligible for the price drop appearance, add an Offer to your Product structured data. The price must be a specific price, not a range (for example, it can’t be $50.99 to $99.99).
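Google says it calculates the drop automatically from a running historical average, but it has not published the exact formula. The Python sketch below assumes a simple mean over the last `window` observed prices. The function name and the 30-observation window are hypothetical choices.

```python
from statistics import mean
from typing import Optional


def price_drop(history: list[float], current: float, window: int = 30) -> Optional[float]:
    """Return how far `current` sits below the running average, or None if it doesn't."""
    # Assumption: a plain mean over the most recent `window` prices.
    recent = history[-window:]
    if not recent:
        return None  # no price history yet, so no baseline to compare against
    average = mean(recent)
    if current < average:
        return round(average - current, 2)
    return None
```

With a price history of 100, 100, 100 and 80, the average is 95. A current price of 70 therefore shows a drop of 25, while a price above the average shows none.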

22nd Jan: Google says same content in different formats is not duplicate

In that Friday’s Google Search Central office hours, a site owner said they had noticed that blog articles whose text was repurposed for videos tended not to rank in Google.

Google’s John Mueller says identical content published in different formats, such as a blog post and a video, is not duplicate content.

Interestingly enough, Mueller pointed out that duplicate content is not as big a deal as webmasters and SEOs make it out to be.

Question:

I have a YouTube channel with 9,000 subscribers and I also have a blog. Sometimes I write a blog post and use the same text I create YouTube videos with. Is this content duplication because Google can understand videos?

I’m saying that because my two blog posts are crawled but they’re not ranking in Google. Even when you put the direct link in Google Search it’s not there. Other blog posts without a video are ranking without a problem. Would it help to use a canonical tag, delete the blog post, or delete the video?

Mueller replied that Google does not read the text of videos and map it to web pages. Even if a video’s script is exactly the same as a blog post, it is still considered a different format of content and will not be seen as duplicate.

Mueller explained that people may search for either an article or a video, and Google would not choose one over the other just because they share the same content.

Also, with regards to duplicate content, if you had the same content in textual form on your website where it’s clearly duplicate content then what would happen there is we would pick one of those versions to show in Google Search.

John Mueller, Google

It’s not the case that we would say ‘this website has some duplicate content, we will not show it at all in Google’. Rather we will say ‘there are two versions here. We will pick one of these to show and we will just not show the other one.’

So that’s something where even when we do recognise duplicate content it’s not the end of the world. It’s really just a matter of us saying we don’t want to show the same thing multiple times to users in the search results. So we will pick one and we will show that one.
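Mueller’s “pick one version and show that one” can be pictured with a toy Python sketch. It groups pages whose text is identical once whitespace and case are normalised, then keeps one URL per group. Google’s real canonicalisation weighs many signals. The shortest-URL tie-breaker and the function names here are purely hypothetical.

```python
import hashlib
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after normalising whitespace and case."""
    normalised = " ".join(text.lower().split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def pick_versions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each URL chosen for display to the duplicate URLs it stands in for."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    chosen: dict[str, list[str]] = {}
    for urls in groups.values():
        # Hypothetical tie-breaker: shortest URL first, then alphabetical order.
        urls.sort(key=lambda u: (len(u), u))
        chosen[urls[0]] = urls[1:]
    return chosen
```

The duplicates are not discarded. They are simply not shown alongside the chosen version, which matches Mueller’s point that duplicate content is “not the end of the world”.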

29th Jan: Google: embedded videos have equal SEO value to uploaded content

In the Google Search Central SEO office-hours on 29th January, John Mueller debunked another widespread belief: that native videos have superior SEO value to embedded videos.

During the Friday stream, Mueller confirmed that videos embedded from other sources carry the same SEO value as native videos, that is, videos uploaded directly to the site itself.

The topic came up multiple times during the Google Search Central SEO office-hours.

Question:

Is there any difference between embedding and uploading a video from an SEO point of view?

Mueller said the two are essentially the same and that Google treats both equally in terms of SEO.

Mueller went on to say that even when a site hosts its own videos, they are very commonly served via a separate CDN (content delivery network), which is technically a different website.

Mueller said the main objective is to get the content indexed and to ensure a good user experience.

It’s essentially the same. It’s very common that you have a separate CDN (content delivery network) for videos, for example, and technically that’s a separate website. From our point of view if that works for your users, if your content is properly accessible for indexing then that’s perfectly fine.

John Mueller, Google

As expected, a response like this sparked a series of questions from the participants.

Robb Young mentioned the common belief that it is better to host your own videos, as this allows your pages to show up when the video is searched for, whereas embedding videos from YouTube often results in YouTube showing up as the source of the content instead of your site.

Robb Young asked Mueller whether this is still the case with YouTube. Mueller replied:

It depends. With YouTube you have two video landing pages. You have the landing page on youtube and you have the landing page on your site. We have to figure out which one of these pages to show and it can happen that we show your site as the video result landing page just because we have more information there perhaps.

John Mueller, Google

It can also be that we show the YouTube landing page because we have more signals or more information there. So that’s something where it’s not automatically the case that we would show the YouTube landing page.

Some other video platforms have their own landing pages that they create automatically, some video hosting platforms don’t do that at all, essentially that’s kind of up to you there.

29th Jan: Google: all Core Web Vitals should be met to reap ranking benefits

In the Google Search Central SEO office-hours on 29th January, John Mueller confirms that all three Core Web Vitals benchmarks should be met in order to reap the benefits of the ranking signal update rolling out in May 2021.

The topic came up when someone asked if one of the Core Web Vitals can be overlooked when the other two are met and if it really matters if Google’s tool shows one Core Web Vital yellow rather than all three green.

John Mueller stressed the significance of the Core Web Vitals launching in May and indicated that meeting all three of them is what counts, although he noted that Google’s final approach had not yet been decided.

Core Web Vitals

Core Web Vitals are Google’s latest criteria for determining whether a website offers a good user experience. They measure three aspects of a page: loading performance, interactivity and visual stability.

- Largest Contentful Paint (LCP): Measures the speed at which a page’s main content is loaded. This should occur within 2.5 seconds of landing on a page.

- First Input Delay (FID): Measures the delay between a user’s first interaction with a page (such as a click or tap) and the moment the browser can begin responding to it. This should be 100 milliseconds or less.

- Cumulative Layout Shift (CLS): Measures how much a page’s visible content shifts unexpectedly while users are on it. Pages should maintain a CLS of 0.1 or less.
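The three benchmarks translate into a simple check. This Python sketch uses the “good” and “needs improvement” boundaries that Google publishes on web.dev, which correspond to green, yellow and red in its tools. Google recommends measuring each metric at the 75th percentile of real page loads. The all-three-green rule in `passes_all` mirrors Mueller’s “if it’s in the green” remark. How Google will actually combine the metrics had not been decided at the time, and the function names are our own.

```python
# Published boundaries per metric: (upper limit for "good", upper limit for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a 75th-percentile value as good (green), needs improvement (yellow) or poor (red)."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_all(values: dict[str, float]) -> bool:
    """True only when every one of the three metrics is in the green."""
    return all(rate(metric, values[metric]) == "good" for metric in THRESHOLDS)
```

A page with LCP 2.1 s, FID 80 ms and CLS 0.15 therefore fails the check. Two green metrics do not make up for one yellow metric.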

Google offers six different ways of measuring Core Web Vitals, and more information can be found in its Core Web Vitals documentation.

John Mueller on Core Web Vitals

“My understanding is we see if it’s in the green and then that counts as it’s OK or not. So if it’s in yellow then that wouldn’t be in the green, but I don’t know what the final approach there will be.

There are a number of factors that come together and I think the general idea is if we can recognize that a page matches all of these criteria then we would like to use that appropriately in search ranking.

I don’t know what the approach would be where there are some things that are OK and some things that are not perfectly OK, like how that would balance out.”

John Mueller replied affirmatively when asked whether more information will come out before the algorithm update rolls out.

He said the general idea is to introduce a badge in search results for pages that meet all three of Google’s Core Web Vitals, but that this has not yet been decided.

The general guideline is we would also like to use this criteria to show a badge in search results, which I think there have been some experiments happening around that.

John Mueller, Google

And for that, we really need to know that all of the factors are compliant. So if it’s not on HTTPS then essentially even if the rest is OK then that wouldn’t be enough.

How the Core Web Vitals will launch in May and how they’ll be shown in the SERPs is down to Google’s final decision, which is likely to be confirmed within the next two months. What we can take away from this discussion with confidence, though, is that complying with all three CWV standards is the way to protect your site from missing out in search rankings as of May 2021.
