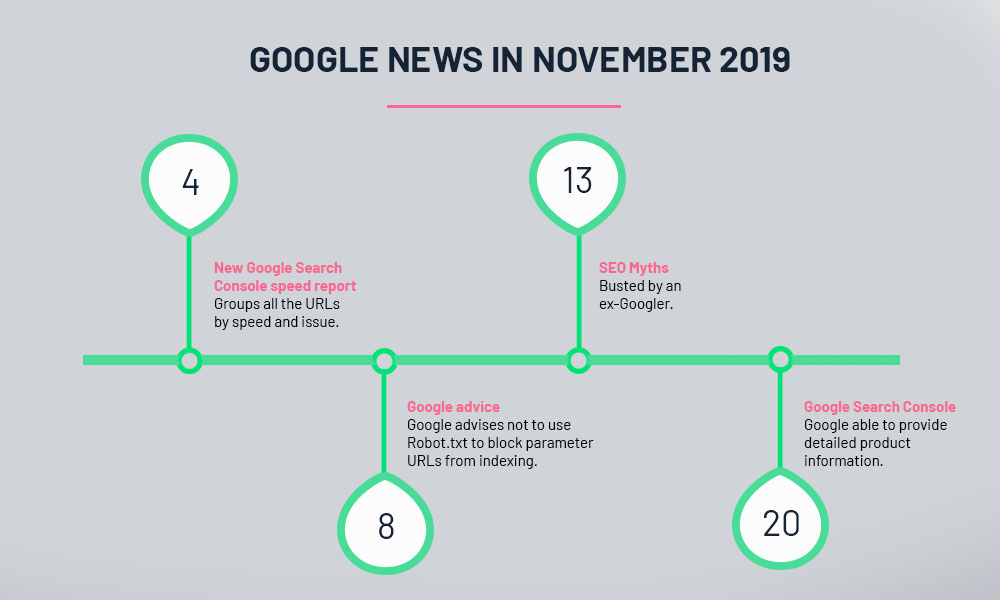

Roundup of Google updates from November 2019

Our monthly hit of Google updates

November hasn’t been one of the busiest months at Google. Previous months had far more activity, especially in the form of new Google Search Console features. The SEO community has been keeping a close eye on the Google BERT update and its impact on the SERPs.

After the September Core Update, SEOs have also been busy trying to recover their SERP drops by following the E-A-T guidelines, and they are waiting for the next Core Update to see the effect of those changes. November also brought some ranking fluctuations, which Google confirmed, but nothing massive: “We did, actually several updates, just as we have several updates in any given week on a regular basis”.

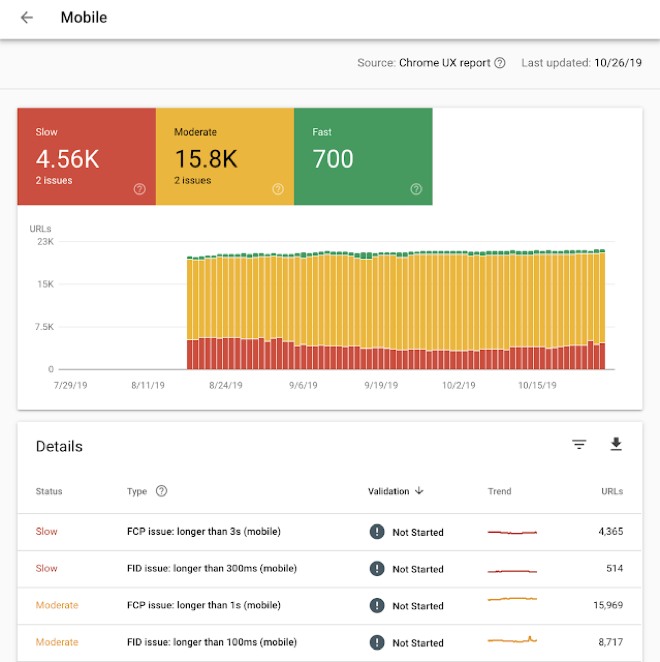

4th Nov – New Google Search Console speed report

Google Search Console now includes a new Speed report that automatically assigns groups of similar URLs to “Fast”, “Moderate”, and “Slow” buckets.

The report groups URLs by speed and by issue, so you can understand which speed problems your website is suffering from.

Unfortunately, the report does not let you export the complete list of URLs, but it does give you an idea of the main problems your website has.

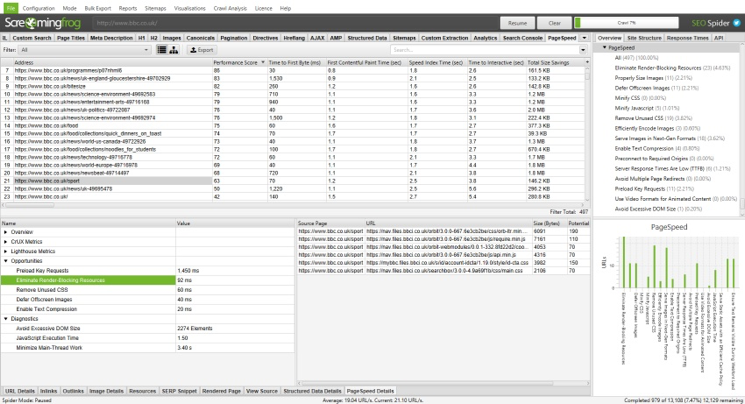

Screaming Frog recently launched version 12.0 of its SEO Spider, which integrates PageSpeed Insights data, including Lighthouse metrics. This lets you analyse every single URL and make smarter decisions about page speed.

8th Nov – Google advises not to use robots.txt to block parameter URLs from indexing

John Mueller from Google was asked why many URLs blocked by robots.txt are still indexed. He advised not to use robots.txt to keep URLs out of the index and to use rel=canonical tags instead.

In our experience, canonicals are a good solution, but so is this approach: add a meta robots noindex tag, wait for Google to remove the pages from its index, and only then block them in robots.txt. The order matters because Google can only see the noindex tag on pages it is still allowed to crawl. Once the pages are out of the index, the robots.txt block stops Google from visiting them again and saves crawl budget.
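To see why the noindex tag has to come before the robots.txt block, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The robots.txt rules, the `/search` path and the helper names `build_parser` and `noindex_is_visible` are hypothetical examples, not Google tooling. Also note that Python's parser only does plain prefix matching and doesn't understand Google-style `*` and `$` wildcards, so it is only an approximation of how Googlebot reads the file.

```python
from urllib import robotparser

# Hypothetical robots.txt that blocks internal search URLs such as
# /search?q=shoes. Python's parser matches path prefixes only (no * or $
# wildcards), so the rule is written as a plain prefix.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""


def build_parser(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def noindex_is_visible(url: str, parser: robotparser.RobotFileParser,
                       agent: str = "Googlebot") -> bool:
    """A meta robots noindex tag only works if the crawler may fetch the page.

    If robots.txt disallows the URL, the crawler never downloads the HTML,
    so it never sees the noindex tag and the URL can stay indexed.
    """
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    url = "https://www.example.com/search?q=shoes"
    before = build_parser("User-agent: *\nDisallow:\n")  # step 1: nothing blocked yet
    after = build_parser(ROBOTS_TXT)                      # last step: block once deindexed
    print(noindex_is_visible(url, before))  # Google can still read the noindex tag
    print(noindex_is_visible(url, after))   # the page (and its tag) is no longer fetched
```

In other words, if the block goes in first, the noindex tag becomes invisible and the URL can linger in the index, which is exactly the situation Mueller was asked about.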

13th Nov – SEO Myths busted by an ex-Googler

This isn’t Google news, but I thought it would be interesting to review some SEO myths busted by former Google Search employee and SEO expert Kaspar Szymanski.

- Myth #1: SEO is a level playing field

- Myth #2: SEO is a one-time project

- Myth #3: SEO is backlinks

- Myth #4: SEO is user signals

- Myth #5: Google hates my website

- Myth #6: Google AdWords has an impact on SEO

- Myth #7: Keywords are key

- Myth #8: SEO is ‘freshness’

- Myth #9: Social signals are an SEO factor

- Myth #10: SEO is magic

The complete article is available on Search Engine Land.

20th Nov – New reporting for product results in Google Search Console

If you have added schema markup to your product pages, Google can show detailed product information, such as price, availability and review ratings, in rich results. Search Console also now offers a new search appearance type called “Products results”, where you can review your monthly clicks, impressions, CTR and average position.
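As an illustration of what that markup can look like, here is a minimal Python sketch that builds a schema.org `Product` snippet in JSON-LD, the format Google recommends for structured data. The `product_jsonld` helper, the product name, SKU and price are all made up for the example, and the set of properties is deliberately small; check Google's product structured data documentation for what is required to be eligible for rich results.

```python
import json


def product_jsonld(name, sku, price, currency,
                   availability="https://schema.org/InStock",
                   rating=None, review_count=None):
    """Return a JSON-LD <script> tag describing one product (hypothetical helper)."""
    data = {
        "@context": "https://schema.org/",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",     # price as a string with two decimals
            "priceCurrency": currency,   # ISO 4217 code, e.g. "EUR"
            "availability": availability,
        },
    }
    # Only add review data when both values are known.
    if rating is not None and review_count is not None:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": str(rating),
            "reviewCount": str(review_count),
        }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(product_jsonld("Trail Running Shoe", "TRS-001", 89.9, "EUR",
                         rating=4.6, review_count=128))
```

The output goes in the HTML of each product page; once Google picks it up, those pages can start showing under the new search appearance filter.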
